Why is everyone talking about misinformation?

Misinformation isn’t a new problem in media. Exaggerated and completely fabricated stories have existed for centuries. But this year, digital media experts named “ads delivering near misinformation” as their top concern.

So why is it such a hot topic right now?

We’re in the midst of a global election year, with voters in more than 60 countries heading to the polls in the coming months. Elections, and the flurry of news coverage they bring, drive consumers to spend more time with digital media. More consumers online means more opportunities for brands to be seen, but it’s crucial for brands to appear in the right places: three-quarters of consumers say they feel less favorably toward brands that advertise on sites that spread misinformation.

Plus, the rise of generative AI in digital media has marketers worried that it could speed up the creation and spread of misinformation across the open web and social channels. In fact, some experts claim that as much as 90% of online content could be AI-generated by 2026.

Three major misinformation challenges

Misinformation has evolved over the years. Today, marketers face three main challenges when it comes to misinformation on the open web and social media. These challenges exist in both generative AI and election-related content, and far beyond.

- Fact checking: Fact checking has long been a critical best practice in media, and it’s more important than ever as misinformation increases. But it requires effort and expertise from real humans, which can be time-consuming and resource-intensive without the support of trained AI models.

- Dynamic changes: Marketers are navigating new narratives that emerge every day across platforms. This revolving door of content can create reputational risk for brands if it isn’t monitored correctly and in real time.

- Nuanced classification: User-generated content (UGC) is incredibly nuanced. Content on the open web and social media ranges from educational and comedic to sarcastic or even violent, and this varied tone makes UGC a major challenge for marketers to classify accurately.

So how can advertisers overcome these challenges to detect and dodge misinformation with precision — and without limiting scale?

The importance of independent partnerships when tackling misinformation

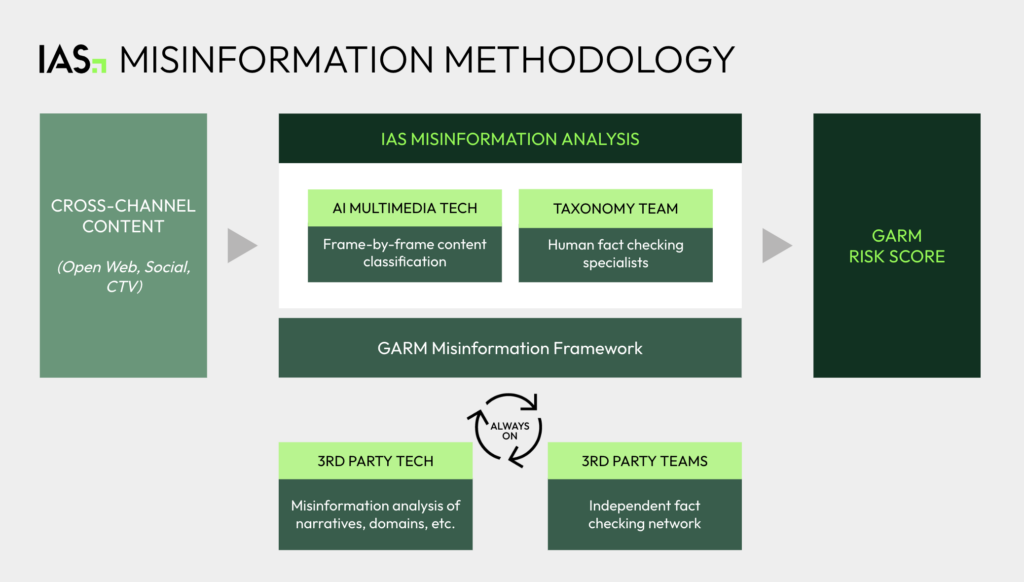

One of the most critical components of misinformation measurement is the set of independent, third-party partnerships that define what content is considered misinformation. Our misinformation capability on both the open web and social media is informed by the Global Alliance for Responsible Media (GARM), the Global Disinformation Index (GDI), and an international fact checking network that enables misinformation categorization at scale, aligned with GARM standards.

Since 2021, we’ve partnered with GDI to help brands avoid misinformation, ensuring journalistic integrity and reaffirming support for quality news sites. Once IAS identifies sites with strong correlations to sources of misinformation, GDI evaluates each page to determine whether it qualifies as misinformation according to its proprietary methodology. In addition, GDI shares its list of organically detected domains for inclusion in our misinformation measurement capability. This unique combination of AI and manual review allows IAS to protect advertisers from risky content at scale. Our partnership with GDI builds on IAS’s expertise in brand safety and suitability, allowing us to align with a trusted, transparent, and independent industry body to identify domains associated with misinformation.

Our misinformation analysis methodology, which we apply across both open web and social media channels, has been trained and retrained with always-on third-party data. It continues to evolve to include viral misinformation narratives and claims, so it stays up to date with the latest misinformation narratives in market. With all of these signals, it’s important to note that IAS remains a neutral party.

Detecting misinformation across the open web

The internet is a big place. We’re talking billions of pages big. Hundreds of thousands of URLs are created every day, meaning new sources of misinformation can pop up at any time. The sheer number of URLs created daily, coupled with the automated nature of programmatic advertising, can make it challenging for brands to completely avoid appearing on sites that publish misinformation.

Misinformation measurement across the open web is vital for advertisers to understand whether their brand is protected during an election season. Most misinformation measurement solutions, however, rely solely on human review of URL lists. The problem is clear: at this volume, humans alone cannot detect misinformation both comprehensively and accurately.

The single most scalable and efficient way to identify misinformation on the open web is through a combination of AI, human, and independent, third-party review, and IAS’s misinformation measurement is the industry’s most scientific and scalable solution. Our enhanced misinformation detection leverages trusted and safe AI and goes far beyond human-only review, detecting emerging threats by determining which sites have a strong correlation to other well-known sources of misinformation. Plus, our partnerships with GARM and GDI allow for trusted, transparent misinformation categorization that continues to evolve to bring the most sophisticated brand safety and suitability capability to market.

And we don’t stop there.

Identifying misinformation across social platforms

Social media is also at the forefront of advertisers’ minds as we approach global elections — and understandably so. With tens of thousands of thoughts, photos, and videos making their way to social media every second, it’s incredibly difficult for brands to gauge what their ads will show up next to.

Advertisers should look to independent solutions that help cut through the abundance and variability of content on social media. Without knowing where their ads appear in the feed, brands risk appearing near content that spreads false narratives and passes off fabricated content as real news.

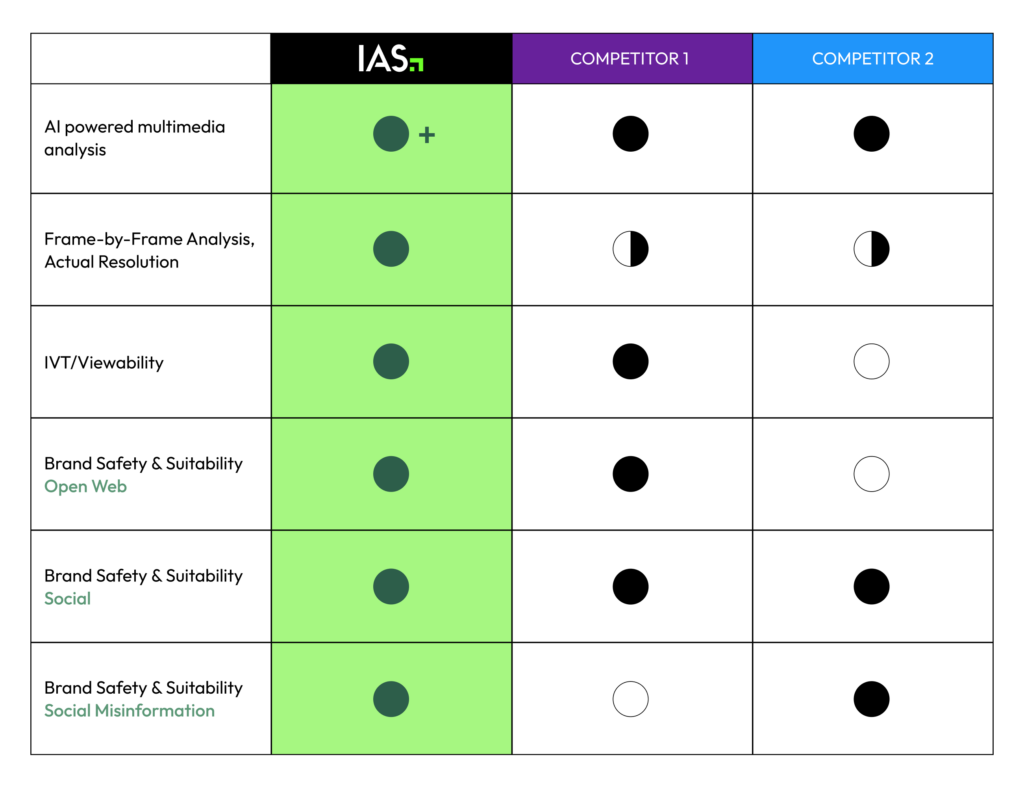

IAS’s Brand Safety and Suitability measurement is the most effective way for advertisers to safeguard and scale their brand on social media during a major election year. Powered by AI-driven Multimedia Technology,* our solutions use frame-by-frame video analysis and speech-to-text conversion to analyze thousands of signals per video and identify misinformation accurately. IAS’s misinformation methodology benefits from our 3+ years of experience analyzing open web misinformation data. This extensive training allows IAS to analyze the tone of content with precision, whether it be satire, sarcasm, educational, or fear-mongering, for the most accurate categorization of misinformation on the feed.

Our Brand Safety and Suitability measurement product includes GARM’s 12th category, misinformation, on select platforms and is consistent with the GARM Framework. With third-party transparency into ad adjacency, advertisers can now measure and take steps to avoid running alongside misinformation. Combining our Multimedia Technology with trusted third-party misinformation assessment providers creates a market-leading misinformation methodology that more accurately identifies misinformation at scale.

How can misinformation be avoided?

Advertisers have traditionally turned to keyword blocking in an attempt to avoid appearing near words and phrases that don’t align with their brand — but this outdated approach doesn’t cut it anymore.

Keyword blocking doesn’t take into account the context, sentiment, or emotion of a given webpage. Without an understanding of the true intention behind a piece of content, brands miss out on reach and block diverse content, diverse content creators, or other information that consumers seek out.

While measurement provides the transparency needed to detect misinformation, advertisers can go even further to avoid misinformation with more accuracy and scalability than keyword blocking. Products like IAS’s Context Control Avoidance help advertisers actively avoid sources of, and topics about, misinformation on the open web while still providing the opportunity for expanded reach. Context Control Avoidance technology leverages IAS’s semantic intelligence engine, which advertisers can use to exclude not only the sources identified as misinformation, but all content relating to misinformation, fake news, and conspiracy theories.

Only IAS offers the ability to choose from GARM-defined risk levels and media types, like entertainment, politics, news, and more, to give advertisers a tailored approach to avoiding harmful misinformation that still allows for scale and support for quality journalism.

Additionally, Brand Safety and Suitability measurement through our flagship Total Media Quality product suite on social platforms can inform advertisers’ suitability strategies. While the approach varies by platform due to tools available, brands can identify trends and optimize away from misinformation with granular reporting insights.

Here’s how brands can use misinformation measurement on social platforms to avoid misinformation:

- Shift media budgets: Test shifting budgets from placements where misinformation may be more present and measure the impacts.

- First-party tools: Where available, consider activating platform first-party tools to avoid certain GARM risk levels, inclusive of misinformation, and monitor performance to ensure brand safety and suitability performs as expected.

- Third-party optimization products: On select platforms, consider Brand Safety Exclusion or Block Lists, which offer protection to avoid running ads next to unsuitable content or publishers.

And we offer brands a number of methods to avoid misinformation on the open web:

- IAS Brand Safety: Automatically avoid misinformation through IAS’s partnership with GDI by setting your risk threshold to moderate or stricter for our standard Offensive Language & Controversial Content category. We recommend that clients interested only in avoiding sources of misinformation activate protection via brand safety.

- IAS Context Control: IAS Context Control misinformation segments are ideal for advertisers who want to extend protection beyond sources of misinformation to topics related to misinformation. Context Control offers more granularity, with seven segments available depending on your risk threshold and content type (such as news, entertainment, and video gaming), all aligned to the GARM framework.

Taking action on misinformation today

Advertisers will always want to avoid misinformation — but not all methods of doing so are created equal. An overly risk-averse approach, like stopping ad spend in news, prevents your brand from appearing in highly scalable contexts and hurts quality journalism. A strategic and thoughtful approach to avoiding misinformation allows brands to scale confidently on channels where it matters most this election season.

Here are the three key ways IAS gives you the most comprehensive and accurate approach to detecting and avoiding misinformation:

- We’re everywhere: Our misinformation capability is built to scale across channels. Starting with open web and social, our model is engineered to expand rapidly to CTV and other emerging channels.

- We’re more accurate: Our trusted AI-driven frame-by-frame analysis delivers up to 74% more accurate brand suitability reporting across social media platforms vs. limited sample frame analysis across GARM categories.

- We partner with independent networks: We leverage a network of third-party service providers and fact checking agencies from around the world, including human fact checkers.

A comprehensive solution that protects all ends of the digital ad cycle is critical. End-to-end, AI-driven measurement coupled with optimization solutions tailored to each brand’s needs is key to detecting and avoiding misinformation in the internet’s most prolific and unpredictable environments. Choose a partner who can safeguard and scale your brand, and steer clear of point solutions that can’t cover the open web or that offer only limited misinformation capabilities.

You can avoid misinformation, protect your brand, and continue to scale this election season. Keep reading about how IAS helps you avoid misinformation, and reach out to an IAS representative today to get started.

*Methodology may vary by platform.
